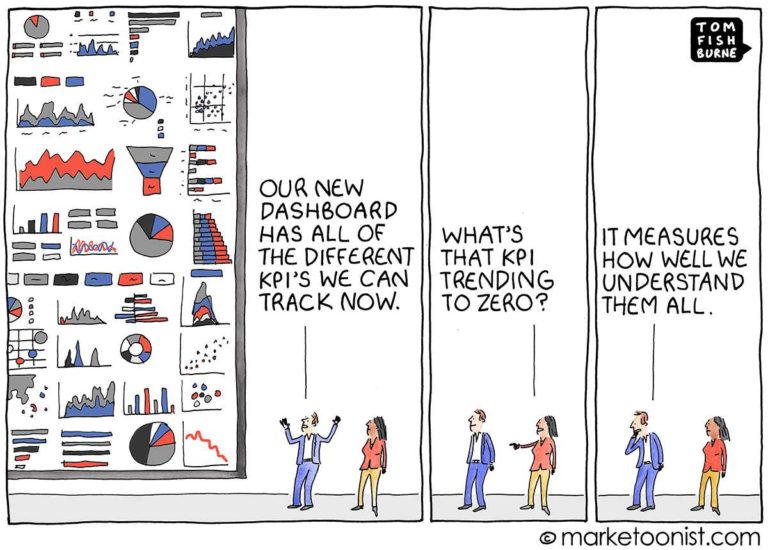

If you don't know what you actually want to know, it is very easy (provided the necessary tooling is available) to turn every micro-development in the company, every process, and every result into colorful charts with the tool of your choice. That keeps you busy for a while and gives you something to put in front of your colleagues that makes it clear to everyone that somebody has really been slogging away these past few days. The best part: the more charts you produce, the more certain you can be that nobody will look at them and point out embarrassing errors. Triple win: your colleagues are impressed, your superiors are satisfied, and you can lean back contentedly. Voilà!

In this account, the problem is obviously not just the protagonist's questionable work ethic but, above all, the fact that data analysis is being done purely for its own sake. And as far-fetched as the scene may seem, at its core there is unfortunately still a grain of corporate reality. “The month is over, so I guess we have to report something.” Whatever nice-looking charts can be found (or quickly produced) are hastily thrown together, and the tedious duty is done. Yet analyzing data actually has a very different purpose than mere occupational therapy for producers and recipients: it serves to generate insight, and specifically where that insight can be put to purposeful use.

No, gaining insight is not an end in itself either

Because more information in itself is, at first, nothing more than exactly that: more information. In the vast majority of cases, though, I don't need as much information as possible (see also my article “Daten sind nicht das höchste Gut der Digitalisierung”, roughly “Data is not the greatest asset of digitalization”), but the right information to guide my actions or to point decisions in the right direction. As a rule, then, it is not about volume but about selection, and that selection depends on the objective of the respective user group:

1. Tracking goal attainment: Less is more!

Whether it is classic revenue or profit targets handed down from the group, funnel targets from sales and marketing, or interdisciplinary targets from the company OKRs: anyone who wants to work in a goal-oriented way should focus on a small number of key figures and compare only these against the status quo at sensible intervals. Where appropriate, a few (only a few!) supporting metrics per key figure can be helpful in the reporting to gain a better understanding of the context and thereby drive supporting processes toward the goal just as much as the core process itself. Here is an example of what such reports can look like.

That means: to track my goal attainment, I don't need dashboards that are as comprehensive as possible and ultimately only dilute my focus, but clearly tailored reports/dashboards that contain nothing beyond the key figures relevant to me. The only exceptions are positions where several goal trackings converge, e.g. at group level or in sales management as the unit supervising several individual teams.
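To make the idea of a deliberately small plan/actual report concrete, here is a minimal sketch in Python. The class `KpiTarget`, the function `plan_vs_actual`, and all figures are hypothetical and invented for illustration; they are not taken from any particular BI tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KpiTarget:
    """One key figure with its planned and actual value (hypothetical model)."""
    name: str
    plan: float    # assumed to be non-zero
    actual: float

    @property
    def attainment(self) -> float:
        """Actual value as a fraction of the planned value."""
        return self.actual / self.plan


def plan_vs_actual(targets: list[KpiTarget]) -> list[str]:
    """Render one short line per KPI: name, actual, plan, attainment."""
    return [
        f"{t.name}: {t.actual:,.0f} of {t.plan:,.0f} ({t.attainment:.0%})"
        for t in targets
    ]


# Invented monthly figures, for illustration only.
report = plan_vs_actual([
    KpiTarget("Revenue", plan=500_000, actual=460_000),
    KpiTarget("New customers", plan=1_200, actual=1_260),
])
for line in report:
    print(line)
```

The point of the sketch is the short input list: the report contains only the handful of key figures the recipient is actually measured against, and nothing else.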

2. Signposts for operations: Less is more!

In operations, data (apart from the regular plan/actual reporting, which is of course relevant here too) generally has to guide action directly. Whether it is sales figures, margins, and return rates for the category manager or impressions, clicks, and order values for the marketing manager: the prepared data typically influences many small and large decisions every day, and should therefore be clearly tailored to the tasks of the person concerned.

That means: an excess of information in dashboards and reports should not distract users from the pressing tasks of a typically tightly scheduled workday, nor should users be forced to work out the relevant key figures themselves through laborious analysis. A good approach here is, for example, individually configured alerts on relevant developments (here is an example of how this can look in practice), combined with more in-depth yet simply designed reports that make the relevant information quick to grasp.
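An individually configured alert of this kind can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `AlertRule`, `evaluate`, the thresholds, and the sample values are all hypothetical and do not describe how any specific BI product implements alerts.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AlertRule:
    """Hypothetical per-user rule: alert when a metric moves too far."""
    metric: str
    max_relative_change: float  # e.g. 0.20 means 20 %
    direction: str              # "up" or "down": which movement is critical


def evaluate(rule: AlertRule, previous: float, current: float) -> Optional[str]:
    """Return an alert message if the rule is breached, otherwise None."""
    if previous == 0:
        return None  # relative change is undefined without a baseline
    change = (current - previous) / previous
    if rule.direction == "up":
        breached = change > rule.max_relative_change
    else:
        breached = change < -rule.max_relative_change
    if not breached:
        return None
    return f"{rule.metric} moved {change:+.0%} against the previous period"


# Invented example values: return rate in percent, revenue in currency units.
returns_rule = AlertRule("Return rate", max_relative_change=0.20, direction="up")
revenue_rule = AlertRule("Revenue", max_relative_change=0.10, direction="down")

print(evaluate(returns_rule, previous=8.0, current=10.0))   # breach: message
print(evaluate(revenue_rule, previous=1000, current=950))   # no breach: None
```

The user only hears from the system when one of their own rules is breached, so the everyday workflow is not interrupted by developments that need no action.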

3. Observing, comparing, checking: Less is more!

Distinct from systematic goal tracking (see point 1) and from active analysis work (see point 4) is the pure observing, comparing, and checking of developments on the basis of analytically prepared data. In management roles, in some operational fields, and of course in controlling, it can make sense, alongside systematic plan/actual reporting, to observe further developments in the units one is responsible for (or that are related to one's own field of activity) and, where appropriate, to compare them with developments from, e.g., comparison periods or the competition, in order to stay informed and/or to ensure that things are taking the desired course.

That means: for such monitoring or controlling purposes, individually configurable, easy-to-filter dashboards are well suited in many cases, whether consumed directly in the BI/analytics system, sent by email on a daily/weekly/monthly schedule, or put up on the big wall screen in the office. The biggest trap in this scenario is surely the spontaneous whim of “Oh, that's interesting too!”, because once monitoring dashboards are too full of information, regular reading goes out the window along with clarity. They quickly degenerate into email spam that is deleted on arrival.

4. Discovering risks and potential: More can indeed be more.

That leaves active analysis work, a task that, because of the expertise it requires, is in fact mostly left to specialists. In this case, more can actually be more for once, because with more (or more thoroughly) analyzed data, the chance of discovering untapped potential or unnoticed risks increases. This applies all the more the less concrete the guiding question is; if the analysis is purely exploratory, the motto may quite bluntly be: more is more!

That means: where (as yet) unknown risks and potential are to be worked out of larger volumes of data, there can in principle hardly be too much analysis. The key here is therefore ultimately to let machines do the work where humans can no longer manage it efficiently. Rule of thumb: the less concrete the question (i.e. the more exploratory the analysis), the higher the analysis effort, and the greater the shift from human to machine.
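As one very simple illustration of letting the machine do the scanning, the following Python sketch flags segments whose value deviates strongly from the rest. The z-score approach, the function `flag_outliers`, the threshold, and the sample data are assumptions chosen for illustration; real exploratory analysis would typically use more robust methods and far more data.

```python
from statistics import mean, stdev


def flag_outliers(values: dict[str, float], z_threshold: float = 2.0) -> list[str]:
    """Return the keys whose value lies more than z_threshold sample
    standard deviations away from the mean of all values."""
    data = list(values.values())
    if len(data) < 3:
        return []  # too few points for a meaningful spread
    mu = mean(data)
    sigma = stdev(data)
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [key for key, v in values.items() if abs(v - mu) / sigma > z_threshold]


# Invented return rates per product category, for illustration only.
return_rates = {
    "Shoes": 0.12, "Shirts": 0.11, "Pants": 0.13, "Jackets": 0.12,
    "Bags": 0.10, "Hats": 0.11, "Socks": 0.45,
}
print(flag_outliers(return_rates))
```

A scan like this can run over every metric and every segment without human effort; the specialist then only looks at the few candidates that were flagged.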

Conclusion: Know your use cases

To tell these four cases apart and to give each user group what it needs to work purposefully with data, it is crucial that the relevant use cases are already known when the underlying BI solution is built and are actively incorporated into its design (or, with a ready-made, use-case-oriented BI solution such as minubo, that they feed into the initial user training). Unfortunately, companies' BI project teams are often still staffed very heavily from IT. That is a big mistake, because without representatives of the business departments, no use-case-oriented solution can be developed (on this topic, see also minubo's Commerce Intelligence Blaupause). After all, surely nobody wants to end up like the ladies and gentlemen in the cartoon above.

As always, I'm happy to talk about this topic in person: simply contact me at lennard@minubo.com and I'll get back to you as soon as possible.